Neural Scaling Laws and Allometry: coincidence or more?

In my pursuit of 1) understanding and 2) pushing forward human and machine intelligence, I came to make the following connection between the biological and the (current) digital realm.

At this stage, this is more of an observation post than an explanation -- assuming there is any correlation at all. Additionally, I will raise a number of questions that could be interesting to explore.

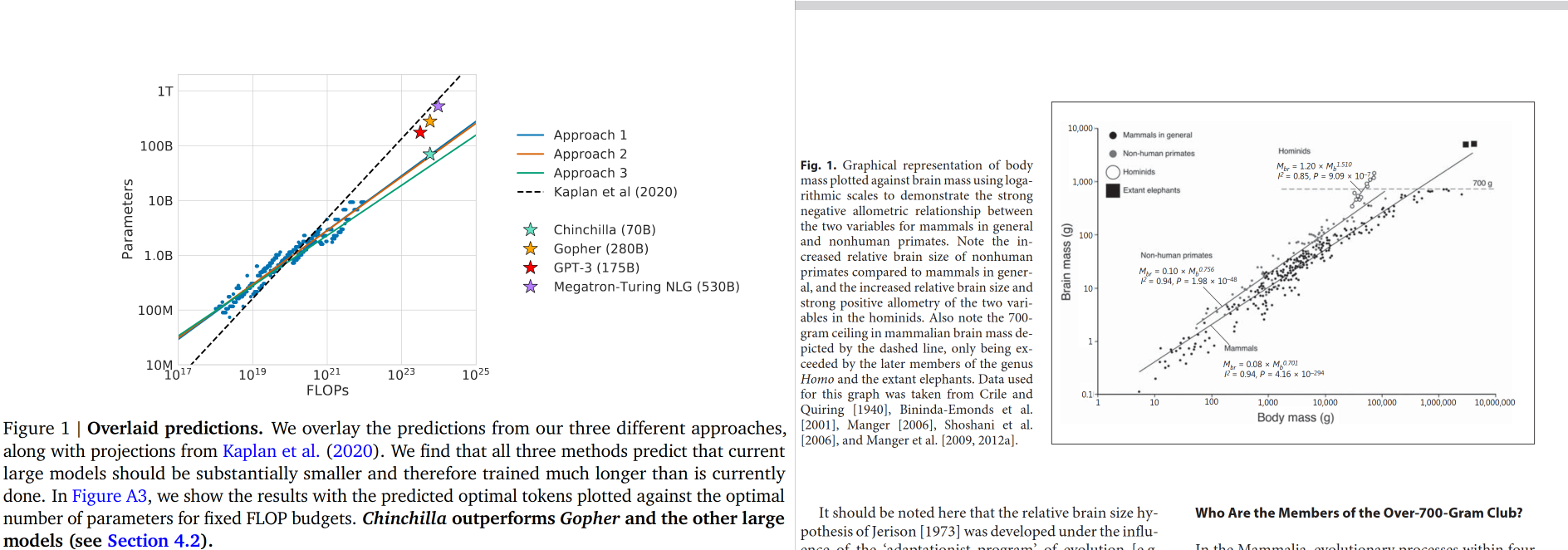

Left plot source: Training Compute-Optimal Large Language Models. The plot shows log(FLOPs) vs. log(model size).

Right plot source: The Evolution of Large Brain Size in Mammals: The 'Over-700-Gram Club Quartet'. The plot shows log(body mass) vs. log(brain mass).
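Both plots use log-log axes, which is where a power law y = a * x^b shows up as a straight line with slope b. As a minimal sketch of what "fitting the trend line" means in that setting, here is a plain least-squares fit in log space. The function name `fit_power_law` is hypothetical, and the data is synthetic: the prefactor 0.05 and exponent 0.75 are arbitrary illustrative values, not measurements taken from either paper.

```python
import math

def fit_power_law(xs, ys):
    """Least-squares fit of log10(y) = log10(a) + b * log10(x); returns (a, b)."""
    lx = [math.log10(x) for x in xs]
    ly = [math.log10(y) for y in ys]
    n = len(lx)
    mean_x = sum(lx) / n
    mean_y = sum(ly) / n
    # Slope and intercept of the ordinary least-squares line in log space.
    sxx = sum((u - mean_x) ** 2 for u in lx)
    sxy = sum((u - mean_x) * (v - mean_y) for u, v in zip(lx, ly))
    b = sxy / sxx
    a = 10 ** (mean_y - b * mean_x)
    return a, b

# Synthetic, illustrative data only (NOT from either plot):
# points generated exactly from y = 0.05 * x^0.75.
xs = [10.0 ** k for k in range(1, 7)]
ys = [0.05 * x ** 0.75 for x in xs]

a, b = fit_power_law(xs, ys)
print(f"prefactor a = {a:.3f}, exponent b = {b:.3f}")
```

On real data, the points of interest would be the ones that sit far from the fitted line in log space, which is one way to make the word "outlier" a bit more concrete.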

-

Given the state of affairs, I would like to draw attention to the two outlier clusters formed by 1) the models GPT-3, Gopher, and Megatron-Turing NLG on the left side and 2) the mammalian brains of hominids on the right side.

-

The case of Chinchilla (70B parameters, 1.4T training tokens) is interesting because, contrary to the other models, it is under-parameterized and over-trained relative to them: a smaller model trained on far more data.
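To put rough numbers on that contrast, here is a small sketch comparing tokens per parameter and approximate training compute. Two things are assumptions on my side and not part of the observation above: the rule of thumb C ≈ 6 * N * D (training FLOPs ≈ 6 × parameters × tokens), which is a common approximation rather than an exact count, and the parameter/token figures for GPT-3, Gopher, and Megatron-Turing NLG, which are the values I recall from the comparison table in the Chinchilla paper and are worth double-checking there. The helper name `approx_train_flops` is hypothetical.

```python
def approx_train_flops(n_params, n_tokens):
    """Rule-of-thumb training compute, C ~= 6 * N * D (an approximation)."""
    return 6.0 * n_params * n_tokens

# (parameters, training tokens). Chinchilla's figures are the ones quoted above;
# the other three are recalled from the Chinchilla paper's comparison table
# and should be treated as assumptions to verify.
models = {
    "GPT-3": (175e9, 300e9),
    "Gopher": (280e9, 300e9),
    "Megatron-Turing NLG": (530e9, 270e9),
    "Chinchilla": (70e9, 1.4e12),
}

for name, (n_params, n_tokens) in models.items():
    ratio = n_tokens / n_params
    flops = approx_train_flops(n_params, n_tokens)
    print(f"{name:20s} tokens/param = {ratio:6.2f}  approx FLOPs = {flops:.2e}")
```

Under these assumptions Chinchilla lands at about 20 tokens per parameter, while the three larger models each see fewer than 2 tokens per parameter, which is the sense in which it looks "over-trained" next to them.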

-

One can also wonder about the necessity of embodiment. Once again, human intelligence does NOT equate to artificial intelligence, i.e., roughly speaking, so-called human intelligence in silico! The term "machine intelligence" may be less error-prone for some; consider looking into: Universal Intelligence: A Definition of Machine Intelligence

-

As for a possible explanation of this observation, different lenses can be used -- again, assuming there is more than an apparent connection.